Extending Ollama 🚀: Leverage the Power of Ollama and LiteLLM for OpenAI Compatibility

Seamless OpenAI Integration with Ollama and LiteLLM

What is Ollama Lacking?

Before we deep dive into extending Ollama, let me just state that I absolutely love using Ollama. The ease of installing it on my MacOS, and downloading the latest LLMs to running models like Yi and Mistral cannot be over-emphasised. On an exploratory basis, Ollama's existing functionalities serve its purpose. From running interactive chat with a model on the terminal to setting up a local open-source coding autopilot in VSCode, Ollama's CLI interface and REST API server work as expected when you need inputs from local LLMs. However, if you have been building LLM applications using GPT models from OpenAI, you would have realised that OpenAI API specification would be a requirement if you wanted the ease of a drop-in replacement of the OpenAI's model with other models, e.g., from Anthropic or Cohere.

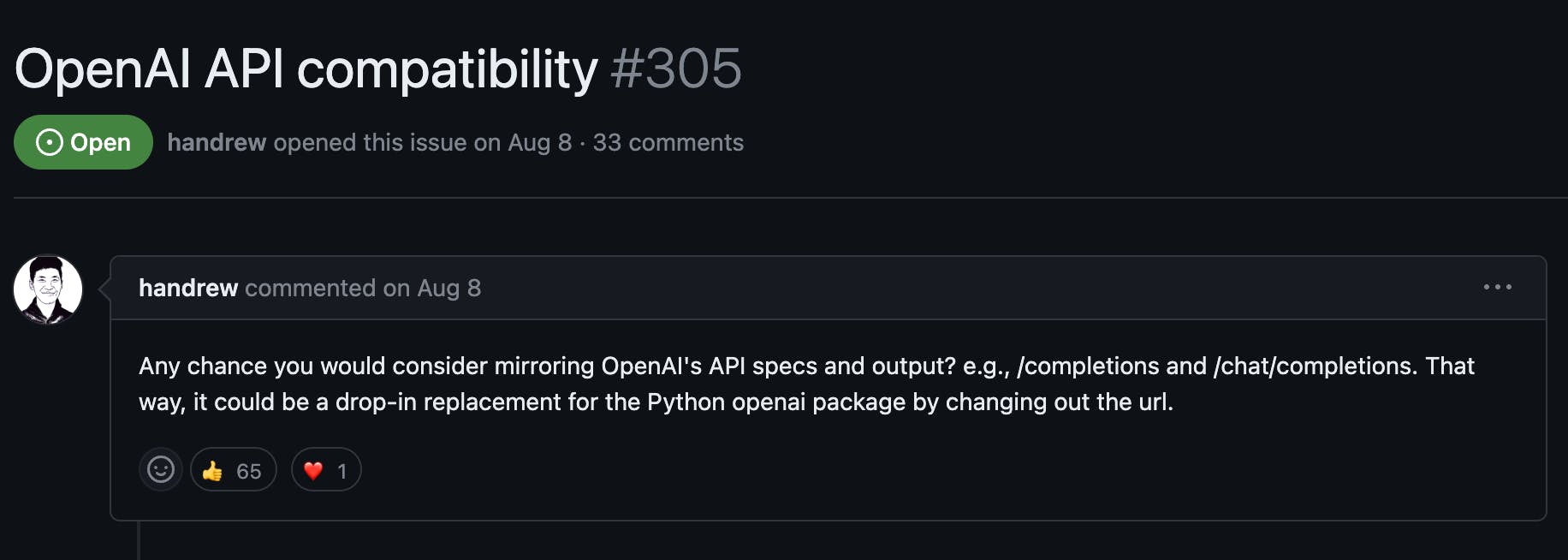

Unfortunately, Ollama's REST API server is not one of the OpenAI API-compatible servers at the moment. While there have been calls to make changes to the existing server to support better interoperability with other projects that use Python's OpenAI library, it seems that the change would likely take some time to actualise.

An alternative is to use other popular solutions such as llama.ccp, which already provides an OpenAI API-like experience through its Python bindings with llama-ccp-python library. However, I am definitely not keen to clutter my personal computer with more applications to run/host LLM locally (besides llama.cpp, other widely discussed tools include LM Studio, and LocalAI). As such, I needed to find a quick fix to enable easier drop-in replacement with Ollama as my local inference server.

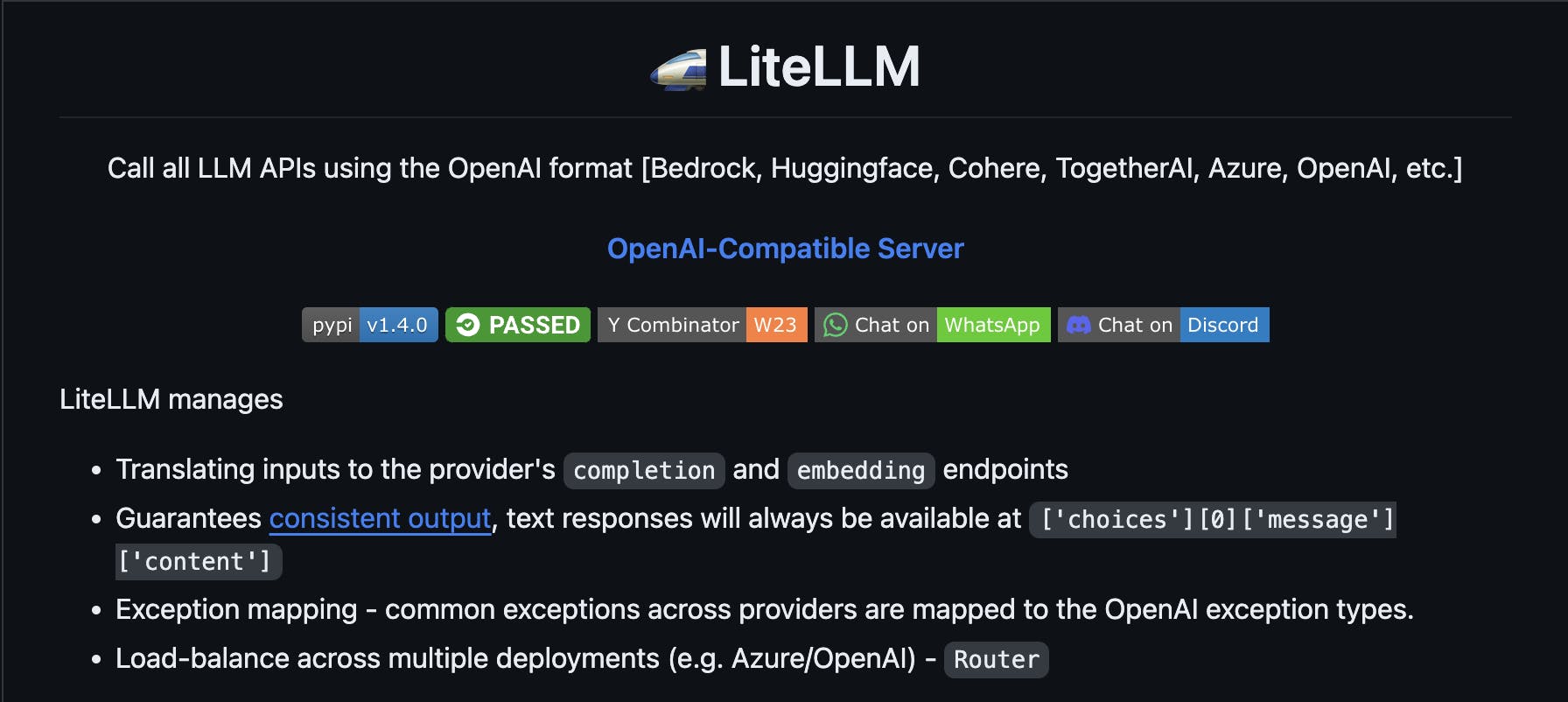

Introducing LiteLLM

In the very same GitHub issue that requested changes to the existing Ollama's REST API server, a user pointed out an interesting repository called LiteLLM that seems to be the key to extending Ollama's usage.

As proclaimed, LiteLLM extends functionalities for over 100+ LLMs to provide OpenAI API format during inference!

By providing a proxy server, LiteLLM enables calling non-OpenAI LLMs in the chat/completions and completions (legacy) format based on existing OpenAI API specifications. This allows previously non-compatible LLM servers to serve inference requests behind a proxy server that handles all the required input/output formatting to match existing OpenAI API chat completion and completion object. In turn, this allows greater interoperability in existing LLM applications that were previously built on only OpenAI models to now handle open-source models with no (minimal, if any) required code changes.

To illustrate, to generate output from an LLM from OpenAI, you will need to call endpoints with suffixes of v1/chat/completions or v1/completions. Compared to Ollama's server, where we generate inference with our local server at api/generate, e.g.,

curl --location 'http://0.0.0.0:11434/api/generate' \

--header 'Content-Type: application/json' \

--data ' {

"model": "deepseek-coder:latest",

"prompt": "what llm are you",

"stream": false

}

'

This presents the first issue as we will first need to re-route traffic from the LLM applications requesting the OpenAI API endpoints to our endpoint. In addition, responses between an OpenAI model may also differ from the open-source model. For instance, an OpenAI API chat completion object represents the chat completion response return by their model, e.g.,

{

"id": "chatcmpl-123",

"object": "chat.completion",

"created": 1677652288,

"model": "gpt-3.5-turbo-0613",

"system_fingerprint": "fp_44709d6fcb",

"choices": [{

"index": 0,

"message": {

"role": "assistant",

"content": "\n\nHello there, how may I assist you today?",

},

"finish_reason": "stop"

}],

"usage": {

"prompt_tokens": 9,

"completion_tokens": 12,

"total_tokens": 21

}

}

Comparing the above output, to the one returned from a model served using Ollama, e.g.,:

{

"model": "deepseek-coder:latest",

"created_at": "2023-11-23T19:23:37.509719Z",

"response": "I'm an AI Programming Assistant based on Deepseek's DeepSeek Coder model designed for answering computer science related queries in a human-like way. I don't have emotions or personal experiences, so my responses will be general and abstract about the topic of programming assistantism with technology rather than specific to any individual named entity like 'LLM'.\n",

"done": true,

"context": [

2042,

417,

274,

...,

],

"total_duration": 642237750,

"load_duration": 1027875,

"prompt_eval_count": 1,

"eval_count": 77,

"eval_duration": 625733000

}

This meant that without a solution like LiteLLM's proxy server, a developer building LLM applications that started with OpenAI's model will need to make significant code changes to adapt to other open-source models, which may all differ from each other in terms of required inputs and outputs. As such, by standardising the whole inference process based on the OpenAI API specifications, we can achieve greater interoperability between a wider variety of LLM models that can be used in a single LLM application.

Hands-on: Creating an OpenAI Proxy Server to Host LLM(s) with Ollama

To get started with LiteLLM, I highly recommend pip install the library in a virtual environment, or even better – with a dependency/package manager like Poetry. You can install LiteLLM with Poetry with the following:

mkdir litellm-proxy && cd litellm-proxy

poetry add litellm@latest

Ensure that the version of LiteLLM installed is minimally at v1.3.2, otherwise it involves extra steps to set up multiple LLMs running locally with Ollama (will explain more of it later).

Once LiteLLM is installed, setting up an OpenAI proxy server for a single LLM is a straightforward task. The process is fully handled through LiteLLM's proxy CLI command:

poetry run litellm --model ollama/<model-name>

# e.g.,

poetry run litellm --model ollama/codellama:13b

The

--modelflag indicates the model you would to start the proxy server with- For Ollama models, remember to prefix

ollamabefore the model name as it is used as an indicator to start the Ollama server (ollama serve) as shown here.

- For Ollama models, remember to prefix

If the model is installed locally via Ollama, you should be greeted by the startup message of the proxy server, now running and listening @ localhost:8000:

INFO: Started server process [2624]

INFO: Waiting for application startup.

#------------------------------------------------------------#

# #

# 'This product would be better if...' #

# https://github.com/BerriAI/litellm/issues/new #

# #

#------------------------------------------------------------#

Thank you for using LiteLLM! - Krrish & Ishaan

Give Feedback / Get Help: https://github.com/BerriAI/litellm/issues/new

LiteLLM: Test your local proxy with: "litellm --test" This runs an openai.ChatCompletion request to your proxy [In a new terminal tab]

LiteLLM: Curl Command Test for your local proxy

curl --location 'http://0.0.0.0:8000/chat/completions' \

--header 'Content-Type: application/json' \

--data ' {

"model": "gpt-3.5-turbo",

"messages": [

{

"role": "user",

"content": "what llm are you"

}

]

}'

Docs: https://docs.litellm.ai/docs/simple_proxy

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

To test if the proxy is working as expected, you can simply make a curl command to the endpoint:

curl --location 'http://0.0.0.0:8000/chat/completions' \

--header 'Content-Type: application/json' \

--data ' {

"model": "ollama/codellama:13b",

"messages": [

{

"role": "user",

"content": "what llm are you"

}

],

}

'

It returns the output as an OpenAI chat completion object:

{

"id": "chatcmpl-55b58050-9682-43e1-8a25-490fd3338b61",

"choices": [

{

"finish_reason": null,

"index": 0,

"message": {

"content": " Im just an AI, not a real person. I dont have a physical body or personal experiences like humans do. However, I am designed to simulate conversation and answer questions to the best of my ability based on my programming and training data.",

"role": "assistant"

}

}

],

"created": 1700812680,

"model": "ollama/codellama:13b",

"object": "chat.completion",

"system_fingerprint": null,

"usage": {

"prompt_tokens": 5,

"completion_tokens": 50,

"total_tokens": 55

}

}

With just a simple command, we have successfully started up an OpenAI proxy server that manages to handle all necessary transformations for the Ollama server to receive requests and return the responses based on the OpenAI API specification.

Another wonderful feature of LiteLLM is the ability for us to host multiple models within the same OpenAI proxy server. This allows for load balancing between multiple locally-hosted models or even, routing between multiple models based on rate-limit awareness. However, proxying multiple Ollama models before v1.3.2 was missing a key functionality.

Unlike how it was like running a single model when hosting multiple Ollama models, LiteLLM does not automatically start the Ollama server in the background on a new process. This meant that we would need to start an Ollama server in a separate terminal tab using ollama serve to allow LiteLLM to connect to Ollama's endpoint @ localhost:11434. However, thanks to the co-founder of LiteLLM for responding promptly, this minor issue was resolved within a day.

To deploy multiple models, we will need a new config file, config.yaml:

# config.yaml

model_list:

# model alias

- model_name: deepseek-coder

litellm_params:

# actual model name like single model

# ollama/<ollama-model-name>

model: ollama/deepseek-coder:latest

- model_name: codellama

litellm_params:

model: ollama/codellama:13b

In this case, we are attempting to deploy two models that are installed on my local machine. By default, the first model in the config file will be the default model. In this case, the default model is deepseek-coder. In addition, there exists a difference between the keys model_name and model, where model_name is the customer-facing name for our deployment. This means that when the user calls for deepseek-coder, we are routing the request to call for ollama/deepseek-coder:latest instead.

To start the proxy server, we can just pass in the config file instead of a single model name under the --model flag:

poetry run litellm --config config.yaml

If all goes well, you will be greeted with a familiar starting terminal screen. However, you will realise that both models that were specified in the config file were stated to have been initialized with the config:

LiteLLM: Proxy initialized with Config, Set models:

deepseek-coder

codellama

Like before for a single model, to test if the proxy server works, we can run two separate curl commands to see if each model has been set up properly.

# for deepseek-coder

curl --location 'http://0.0.0.0:8000/chat/completions' \

--header 'Content-Type: application/json' \

--data ' {

"model": "deepseek-coder",

"messages": [

{

"role": "user",

"content": "what llm are you"

}

],

}

'

{

"id": "chatcmpl-3905154e-c19e-42c1-b6c9-4756cd15b131",

"choices": [

{

"finish_reason": null,

"index": 0,

"message": {

"content": "I'm an AI Programming Assistant based on Deepseek's Coder model developed by the company deepSeek for answering queries related to computer programming languages and technologies in general scenarios such as algorithm development, code optimization tools etc. My purpose is not just about solving technical problems but also helping users understand complex concepts at a high level through simple language models like me!\n",

"role": "assistant"

}

}

],

"created": 1700814872,

"model": "ollama/deepseek-coder:latest",

"object": "chat.completion",

"system_fingerprint": null,

"usage": {

"prompt_tokens": 5,

"completion_tokens": 70,

"total_tokens": 75

}

}

# for code-llama

curl --location 'http://0.0.0.0:8000/chat/completions' \

--header 'Content-Type: application/json' \

--data ' {

"model": "codellama",

"messages": [

{

"role": "user",

"content": "what llm are you"

}

],

}

'

{

"id": "chatcmpl-beb3e283-40ce-4fa0-a0de-8a08e9bcdbf0",

"choices": [

{

"finish_reason": null,

"index": 0,

"message": {

"content": " Im just an AI, so I dont have a specific LLM (Law Library and Media) or other library collection. However, there are many LLM collections available online, such as those maintained by law schools and legal organizations. These collections often include legal research materials, such as cases, statutes, and regulations, as well as other resources, such as legal blogs, news articles, and podcasts.",

"role": "assistant"

}

}

],

"created": 1700815040,

"model": "ollama/codellama:13b",

"object": "chat.completion",

"system_fingerprint": null,

"usage": {

"prompt_tokens": 5,

"completion_tokens": 82,

"total_tokens": 87

}

}

As you observed, we have successfully proxied two Ollama models with LiteLLM's proxy server, allowing us to interact with existing Ollama models in the same way we interact with OpenAI's model.

That's all for this article. Now, go out and build more amazing LLM applications with expanded accessibility to open-source LLM models 🔥!